Automated Document Processing With Dexit: Behind the Scenes of AI Document Extraction & Classification

20 March, 2025 | 8 Min | By Srivatsan Sridhar

Dexit stands out with its ability to classify documents, extract key entities, and enable seamless auto-indexing, redefining efficiency in automated document processing and management.

At the heart of Dexit’s performance is a cutting-edge approach that emulates human comprehension of documents. By leveraging visual and spatial context, Dexit accurately categorizes files — from forms and invoices to complex medical reports. What sets Dexit apart is its independence from traditional identifiers like document-type templates or patient-identifying barcodes. Instead, its advanced entity extraction capabilities identify and retrieve critical details, such as patient names, dates, and addresses, with unmatched precision.

This synergy of intelligent classification, robust entity extraction, and fast, automated indexing makes Dexit an indispensable tool for organizations managing vast and complex document repositories. Users can locate relevant files effortlessly, based on content rather than predefined tags, saving time and reducing errors.

However, arriving at this final solution was no straightforward journey. Our team explored, tested, and refined multiple approaches for AI document extraction and classification. Here’s a closer look at the paths we took, the challenges we overcame, and the insights that shaped Dexit’s ultimate design.


Document Type Classification

Image Similarity Metrics

To tackle document classification, we initially experimented with image similarity metrics, relying on visual cues to determine document types. The approach stepped through the pages of a scanned batch, comparing each page to the previous one to identify visual differences that could signal a page break (a boundary between two documents). Pages with high visual similarity were grouped together as part of the same document.

We tested several metrics:

- Structural Similarity Index Measure (SSIM) - Measures the similarity between two images based on luminance, contrast, and structure.

- Mean Squared Error (MSE) - Calculates the average squared difference between corresponding pixels in two images.

- Mean Absolute Error (MAE) - Measures the average absolute difference between corresponding pixels.

- Normalized Cross-Correlation (NCC) - Computes the correlation between two images after normalization.

- Peak Signal-to-Noise Ratio (PSNR) - Assesses the quality of a reconstructed image compared to a reference.
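To make the pixel-level metrics concrete, here is a minimal sketch of MSE, MAE, PSNR, and NCC in plain Python. It assumes each page is a flattened list of grayscale values in the 0 to 255 range, and it uses the zero-mean form of NCC; both are assumptions for illustration, not a description of Dexit's code. SSIM is left out because it is computed over local windows; scikit-image's `structural_similarity` is one commonly used implementation.

```python
import math


def mse(a, b):
    """Mean squared error between two equally sized pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def mae(a, b):
    """Mean absolute error between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def psnr(a, b, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the pages are identical."""
    err = mse(a, b)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


def ncc(a, b):
    """Zero-mean normalized cross-correlation, in the range [-1, 1]."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # at least one page is a flat color; correlation is undefined
    return sum(x * y for x, y in zip(da, db)) / denom


# Two tiny 2x2 "pages", flattened row by row (illustrative values only).
page_a = [0, 50, 100, 150]
page_b = [0, 50, 100, 250]
print(mse(page_a, page_b), mae(page_a, page_b))
print(psnr(page_a, page_b), ncc(page_a, page_b))
```

Lower MSE and MAE mean more similar pages, while higher PSNR and NCC mean more similar pages, so any threshold has to be chosen per metric.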

SSIM reached 71% accuracy in detecting page breaks at a specific threshold, and the overall precision of these methods stayed around 70%. A major limitation was their inability to account for textual content, which led to misclassifications when documents of different types had visually similar layouts.
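The page-by-page grouping described above can be sketched as follows. The similarity function here is a simple stand-in (one minus the normalized mean absolute error), not SSIM, and the threshold of 0.8 is a hypothetical value chosen only for the example.

```python
def similarity(page_a, page_b, max_value=255.0):
    """Stand-in similarity score in [0, 1]; 1.0 means identical pixels."""
    diff = sum(abs(x - y) for x, y in zip(page_a, page_b)) / len(page_a)
    return 1.0 - diff / max_value


def group_pages(pages, threshold=0.8):
    """Group consecutive page indices into documents.

    A new document starts whenever a page's similarity to the
    previous page falls below the threshold.
    """
    if not pages:
        return []
    documents = [[0]]
    for i in range(1, len(pages)):
        if similarity(pages[i - 1], pages[i]) >= threshold:
            documents[-1].append(i)  # looks like the same document
        else:
            documents.append([i])    # visual change: treat as a page break
    return documents


# Four flattened grayscale "pages": two look alike, then the layout changes.
pages = [
    [250, 250, 0, 0],
    [250, 240, 0, 10],
    [0, 0, 250, 250],
    [10, 0, 250, 240],
]
print(group_pages(pages))
```

Because the comparison only sees pixels, two different document types with the same layout would be merged into one group, which is the limitation noted above.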

Fusion Strategy

To overcome these limitations, we experimented with a fusion strategy, combining image classification models (such as CNN and ResNet) with text-based classification models (e.g., ClinicalBERT). This hybrid approach leveraged both visual and textual information to improve classification accuracy.

The strategy involved extracting text through Optical Character Recognition (OCR), identifying keywords or phrases, and combining the classification probabilities from both image and text models via a sliding window technique. While this approach improved accuracy, it failed to handle spatial relationships between text elements, which proved critical for correctly classifying documents with complex layouts, such as forms and invoices.
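One way to picture this kind of late fusion is shown below. It is a sketch under stated assumptions: the per-page probabilities are combined with a weighted average, then smoothed over a centered window of neighboring pages. The weight, the window size, and the function names are hypothetical; the article does not specify how the probabilities were combined.

```python
def fuse(image_probs, text_probs, image_weight=0.5):
    """Weighted average of two probability dicts that share the same labels."""
    return {
        label: image_weight * image_probs[label]
        + (1 - image_weight) * text_probs[label]
        for label in image_probs
    }


def smooth(page_probs, window=3):
    """Average each page's probabilities with its neighbors in a centered window."""
    half = window // 2
    smoothed = []
    for i in range(len(page_probs)):
        neighbors = page_probs[max(0, i - half): i + half + 1]
        smoothed.append({
            label: sum(p[label] for p in neighbors) / len(neighbors)
            for label in page_probs[i]
        })
    return smoothed


def classify_pages(image_probs, text_probs, image_weight=0.5, window=3):
    """Fuse per-page probabilities, smooth them, and pick the top label per page."""
    fused = [fuse(i, t, image_weight) for i, t in zip(image_probs, text_probs)]
    return [max(p, key=p.get) for p in smooth(fused, window)]


# Made-up outputs from an image model and a text model for three pages.
image_out = [
    {"invoice": 0.9, "form": 0.1},
    {"invoice": 0.4, "form": 0.6},
    {"invoice": 0.8, "form": 0.2},
]
text_out = [
    {"invoice": 0.8, "form": 0.2},
    {"invoice": 0.5, "form": 0.5},
    {"invoice": 0.9, "form": 0.1},
]
print(classify_pages(image_out, text_out))
```

In this example the middle page leans toward "form" on its own, but the window pulls it back to the label of its neighbors. Nothing in the fusion step uses where text sits on the page, which is the gap the final solution addresses.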

Document Type Classification - Our Final Solution

The final document classification solution utilizes a State-of-the-Art (SOTA) image classification model, optimized for document understanding. This model excels by integrating both visual and spatial information, making it highly effective for documents with structured layouts like forms and invoices. Unlike traditional text-based models, which struggle with complex document structures, this SOTA model processes both the visual appearance and spatial arrangement of elements within the document to classify it accurately.

How Dexit’s AI Classifies Documents:

- Data Collection and Preprocessing: Dexit collects a variety of client documents, which can include scanned images, faxes, etc. Each image is labeled with its document type, which the model needs in order to learn from the data. The images are then preprocessed to remove noise, using techniques from OpenCV and SOTA libraries, so the data is clean and ready for classification.

- Fine-tuning: Post-preprocessing, we split the dataset into training and testing sets, ensuring an even distribution of document types. The model is fine-tuned on the training data, adjusting parameters (e.g., learning rate, batch size) via hyperparameter tuning to optimize classification accuracy.

- Validation: After fine-tuning, we validate the model’s performance using standard metrics—accuracy, precision, recall, and F1 score—and visualize the results through a confusion matrix to assess performance across all document types.

- Probability Assignment and Prediction: When a document is fed into the model, it generates probabilities for each possible document type. The document type with the highest probability is assigned as the predicted category.
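The prediction step in the last bullet can be sketched in a few lines. This assumes the model emits one raw score (logit) per document type and that a softmax turns those scores into probabilities; the article only says the model generates probabilities, so the softmax and the label names are assumptions.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical scores for three document types.
labels = ["invoice", "form", "lab_report"]
label, confidence = predict([2.0, 0.5, -1.0], labels)
print(label, round(confidence, 3))
```

Keeping the probability alongside the label makes it possible to route low-confidence pages to manual review instead of indexing them automatically.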

Trained on just 30,000 images covering 100 unique document types with varying layouts, our final solution achieved an overall classification accuracy of 97%. Document types with far more layout variation than the rest reached around 85% accuracy. This can be improved by adding more training documents for those high-variation document types.
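The gap between overall accuracy and the accuracy of individual document types is easiest to see with per-class metrics. The following is a small sketch that builds a confusion matrix and derives precision, recall, and F1 per label; the labels and predictions are made up for the example and are not Dexit's results. Per-type recall is one way to read "accuracy for a document type".

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_matrix(y_true, y_pred):
    """Count (true_label, predicted_label) pairs."""
    return Counter(zip(y_true, y_pred))


def per_class_report(y_true, y_pred):
    """Precision, recall, and F1 for every label seen in the data."""
    matrix = confusion_matrix(y_true, y_pred)
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = matrix[(label, label)]
        fp = sum(n for (t, p), n in matrix.items() if p == label and t != label)
        fn = sum(n for (t, p), n in matrix.items() if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    return report


# Made-up labels for six pages.
y_true = ["invoice", "invoice", "form", "form", "form", "referral"]
y_pred = ["invoice", "form", "form", "form", "form", "invoice"]
print(accuracy(y_true, y_pred))
for label, scores in per_class_report(y_true, y_pred).items():
    print(label, scores)
```

A single overall number can hide a weak class, so inspecting the per-label rows is what reveals which document types need more training examples.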

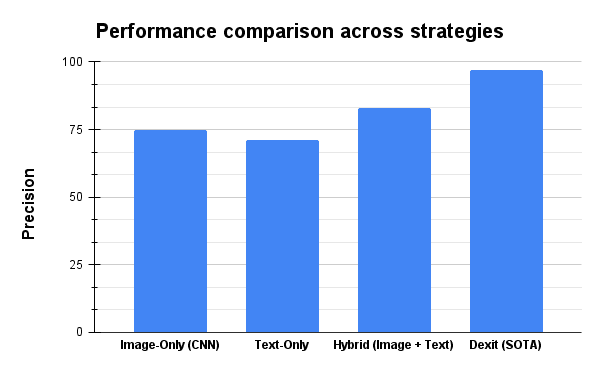

Performance comparison of different document classification models and fusion strategies

Entity Extraction

For entity extraction, we explored several initial approaches before arriving at a fine-tuned model. These included DocQuery, InternVL 2.0, and BERT for question answering. After extensive evaluation, we discarded these methods due to several critical limitations, as outlined below:

DocQuery

DocQuery, a library designed for analyzing semi-structured and unstructured documents, initially showed promise for extracting entities. However, we faced two significant challenges:

- Lack of Maintenance and Support: DocQuery has been discontinued, which raised concerns about long-term viability. With no ongoing support, issues that arose during integration couldn’t be addressed, making scaling unreliable.

- Limited Adaptability: Without continuous updates, DocQuery struggled to adapt to new document formats or layouts, restricting its ability to handle a wide range of documents effectively.

InternVL 2.0

InternVL 2.0, a multimodal model designed to handle long texts, images, and videos, demonstrated competitive performance across a range of tasks. However, it wasn’t suitable for entity extraction for the following reasons:

- Focus on Question Answering: InternVL 2.0 is optimized for question answering, not entity extraction. The architecture and training strategies for QA tasks differ significantly from those for entity extraction, where fine-tuning for token-level information is paramount.

- Potential for Lower Accuracy: In our testing, InternVL’s performance was lower in terms of precision and recall for entity extraction. Documents with varied layouts and scattered text across different sections presented challenges that InternVL could not adequately address.

BERT for Question Answering

While BERT is a powerful model for question answering, applying it directly to entity extraction came with its own set of challenges:

- Requirement for Extensive Data Cleaning: Using BERT for raw document text required significant pre-processing (e.g., removing irrelevant text, correcting errors, and structuring content) to achieve acceptable results. This added complexity and computational overhead, reducing efficiency.

- Lack of Layout Awareness: Traditional BERT models lack inherent awareness of spatial layout, which is critical for entity extraction in structured documents. In cases where layout contributes to the meaning (such as forms with multiple sections), this limitation led to less accurate extraction.

While DocQuery, InternVL 2.0, and BERT for question answering each offer potential for entity extraction, they present limitations that hinder their effectiveness compared to a fine-tuned model. DocQuery's lack of maintenance, InternVL 2.0's focus on question answering, and BERT's need for extensive pre-processing make them less desirable options.

In contrast, our fine-tuned model’s token-level annotations and task-specific optimization make it a more accurate, efficient, and robust solution for entity extraction in Dexit.

Entity Extraction - Our Final Solution

Dexit’s AI employs two distinct methods for entity extraction:

- Fine-tuned Model for Entity Extraction

- Out-of-the-Box Solution Combining Optical Character Recognition (OCR) and LLM

Fine-tuned Model for Entity Extraction

The fine-tuned model is specifically designed for documents undergoing auto-indexing. This approach integrates both OCR and spatial information to ensure high accuracy in entity extraction. The process includes several key stages:

1. Training Data Preparation: For training the model, we make use of the following inputs:

a. The full image as it is.

b. The OCR text from the image:

The document images are processed with a State-of-the-Art OCR engine to extract text. As part of the OCR process, token-level bounding boxes are generated for individual words or characters, capturing fine-grained spatial information about each token.

c. The key:value pairs of the entities that are present in the image.

The key:value pairs along with the OCR text are compiled into a JSON-format labeled dataset by following some post-processing techniques. This dataset serves as the foundation for fine-tuning the model, allowing it to learn both the textual context and spatial relationships of the entities within the document.

2. Fine-tuning: The data is split into train/val/test datasets in a 70/10/20 ratio. The model is fine-tuned on the train dataset and validated on the val dataset as training progresses.

3. Inference and Validation: During inference, the image is input into the system where OCR is applied, generating token-level boxes. The model predicts entity labels for each box, which are then compared against a test dataset to validate performance. Ideally, all boxes within a multi-word entity should be correctly identified. This precision is crucial for tasks like auto-indexing, where accuracy directly impacts downstream processing.
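The requirement that every box of a multi-word entity be labeled correctly can be illustrated with a short sketch. It assumes the model emits BIO-style tags per token (`B-` for the first token of an entity, `I-` for continuation tokens, `O` for everything else); the tagging scheme, label names, and helper functions are assumptions for illustration, since the article does not name the scheme used.

```python
def group_entities(tokens, labels):
    """Merge consecutive B-/I- tagged tokens into (entity_type, text) pairs."""
    entities = []
    current_type, current_words = None, []

    def flush():
        if current_words:
            entities.append((current_type, " ".join(current_words)))

    for token, label in zip(tokens, labels):
        starts_new = label.startswith("B-") or (
            label.startswith("I-") and label[2:] != current_type
        )
        if starts_new:
            flush()
            current_type, current_words = label[2:], [token]
        elif label.startswith("I-"):
            current_words.append(token)
        else:  # "O": token belongs to no entity
            flush()
            current_type, current_words = None, []
    flush()
    return entities


def exact_match_rate(predicted, expected):
    """Fraction of expected entities that were recovered exactly."""
    if not expected:
        return 1.0
    return sum(e in predicted for e in expected) / len(expected)


tokens = ["Patient:", "Jane", "Doe", "DOB", "01/02/1980"]
expected = [("NAME", "Jane Doe"), ("DOB", "01/02/1980")]

good = group_entities(tokens, ["O", "B-NAME", "I-NAME", "O", "B-DOB"])
# One mislabeled box ("Doe" tagged as O) truncates the whole name.
bad = group_entities(tokens, ["O", "B-NAME", "O", "O", "B-DOB"])
print(good, exact_match_rate(good, expected))
print(bad, exact_match_rate(bad, expected))
```

Scoring at the entity level rather than the token level is stricter: a single wrong box turns "Jane Doe" into "Jane", which would index the document under the wrong patient name.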

Advantages of a Fine-tuned Model

- Inherent Layout Awareness: Our SOTA entity extraction model is explicitly designed to understand and leverage the spatial relationships between text elements, a crucial factor for accurate entity extraction in structured documents. This inherent layout awareness eliminates the need for additional preprocessing steps to incorporate layout information.

- Token-level Annotations for Granularity: The model employs token-level annotations, enabling it to predict entities at the word level. This fine-grained approach allows for the precise extraction of multi-word entities, enhancing the accuracy and granularity of the extracted information.

- Optimized for Entity Extraction: Fine-tuning using manually labeled data ensures the model is specifically tailored for the entity extraction task. This targeted training process optimizes the model's parameters to excel in extracting the desired entities from the target documents.
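As a rough picture of what token-level annotations in a JSON-format labeled dataset might look like, the sketch below aligns key:value pairs with OCR tokens and their bounding boxes. The record schema (`text`, `bbox`, `label`), the BIO tag names, and the exact-match alignment are all hypothetical; real OCR output usually needs fuzzier matching than this.

```python
import json


def label_tokens(ocr_tokens, entities):
    """Attach a BIO label to each OCR token.

    ocr_tokens: list of {"text": str, "bbox": [x0, y0, x1, y1]}
    entities:   dict mapping an entity key to its value string
    """
    words = [t["text"] for t in ocr_tokens]
    labels = ["O"] * len(words)
    for key, value in entities.items():
        target = value.split()
        if not target:
            continue  # nothing to align for an empty value
        for start in range(len(words) - len(target) + 1):
            if words[start:start + len(target)] == target:
                labels[start] = f"B-{key}"
                for i in range(start + 1, start + len(target)):
                    labels[i] = f"I-{key}"
                break  # label only the first occurrence
    return [
        {"text": t["text"], "bbox": t["bbox"], "label": label}
        for t, label in zip(ocr_tokens, labels)
    ]


# Hypothetical OCR output for one line of a form (pixel coordinates).
ocr_tokens = [
    {"text": "Patient", "bbox": [10, 10, 80, 30]},
    {"text": "Jane", "bbox": [90, 10, 130, 30]},
    {"text": "Doe", "bbox": [135, 10, 170, 30]},
    {"text": "DOB", "bbox": [300, 10, 340, 30]},
    {"text": "01/02/1980", "bbox": [350, 10, 450, 30]},
]
record = label_tokens(ocr_tokens, {"NAME": "Jane Doe", "DOB": "01/02/1980"})
print(json.dumps(record, indent=2))
```

Keeping the bounding box next to each labeled token is what lets a layout-aware model learn where on the page a given entity tends to appear, not only which words surround it.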

Out-of-the-Box Solution (OCR + LLM)

Dexit’s AI also uses an alternative method for documents that don't require indexing but might be queried using natural language. This method comprises the following steps:

- OCR: Dexit uses a SOTA OCR engine, chosen for its superior accuracy even with handwritten text. Image preprocessing, including resizing, denoising, and binarization, is crucial for optimizing OCR accuracy.

- Text Layout Preservation: Dexit maintains the spatial layout of the extracted text as accurately as possible to mirror its original format. This is vital for the LLM's ability to extract entities accurately. Without layout preservation, the entity extraction accuracy can decrease by 40-50%.

- Entity Extraction With SOTA LLM: Dexit uses a SOTA open-source LLM, chosen for its performance, with a custom prompt to extract specific entities from the text. This prompt instructs the model to return the extracted information in JSON format.
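The layout preservation and prompting steps above can be sketched together. This example assumes each OCR token comes with the pixel coordinates of its top-left corner, rebuilds the page as spaced text on a character grid, wraps it in a prompt, and parses a JSON reply. The grid sizes, prompt wording, field names, and helper functions are hypothetical, and no LLM is actually called; the reply string is hard-coded.

```python
import json


def render_layout(tokens, char_width=10, line_height=20):
    """Place OCR tokens on a character grid so columns stay aligned.

    tokens: list of {"text": str, "x": int, "y": int} (top-left pixels)
    """
    rows = {}
    for token in tokens:
        rows.setdefault(token["y"] // line_height, []).append(token)
    lines = []
    for row in sorted(rows):
        line = ""
        for token in sorted(rows[row], key=lambda t: t["x"]):
            column = token["x"] // char_width
            if line and len(line) >= column:
                line += " "                # keep at least one space between words
            else:
                line = line.ljust(column)  # pad out to the token's column
            line += token["text"]
        lines.append(line)
    return "\n".join(lines)


def build_prompt(layout_text, fields):
    """Ask for the requested fields as a JSON object."""
    return (
        "Extract the following fields from the document text below and "
        "reply with a JSON object only. Use null for fields that are absent.\n"
        f"Fields: {', '.join(fields)}\n\nDocument:\n{layout_text}"
    )


def parse_response(response, fields):
    """Pull the JSON object out of a model reply; missing fields become None."""
    start, end = response.find("{"), response.rfind("}")
    if start == -1 or end <= start:
        return {field: None for field in fields}
    try:
        data = json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return {field: None for field in fields}
    return {field: data.get(field) for field in fields}


tokens = [
    {"text": "Name:", "x": 0, "y": 0},
    {"text": "Jane", "x": 60, "y": 2},
    {"text": "DOB:", "x": 300, "y": 1},
    {"text": "Phone:", "x": 0, "y": 40},
]
fields = ["patient_name", "dob"]
print(build_prompt(render_layout(tokens), fields))

# Stand-in for a model reply; a real system would send the prompt to the LLM.
reply = 'Here you go: {"patient_name": "Jane", "extra": 1}'
print(parse_response(reply, fields))
```

Padding tokens to their original columns keeps labels and values visually paired in the text the model sees, and parsing defensively guards against replies that wrap the JSON in extra prose.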


Building Dexit’s automated document processing wasn’t just about choosing the right models—it was about refining strategies, optimizing performance, and balancing accuracy with real-world constraints. With a fusion of state-of-the-art classification, entity extraction, and auto-indexing, Dexit provides a scalable, high-precision solution for handling complex healthcare document workflows.

And this is just the beginning. As we continue to iterate and improve, we push the limits of AI in document understanding—making automation smarter, faster, and more adaptable with every step.
